Version 4 (modified by mitty, 15 years ago)

grow a RAID5 array from 5 to 7 disks

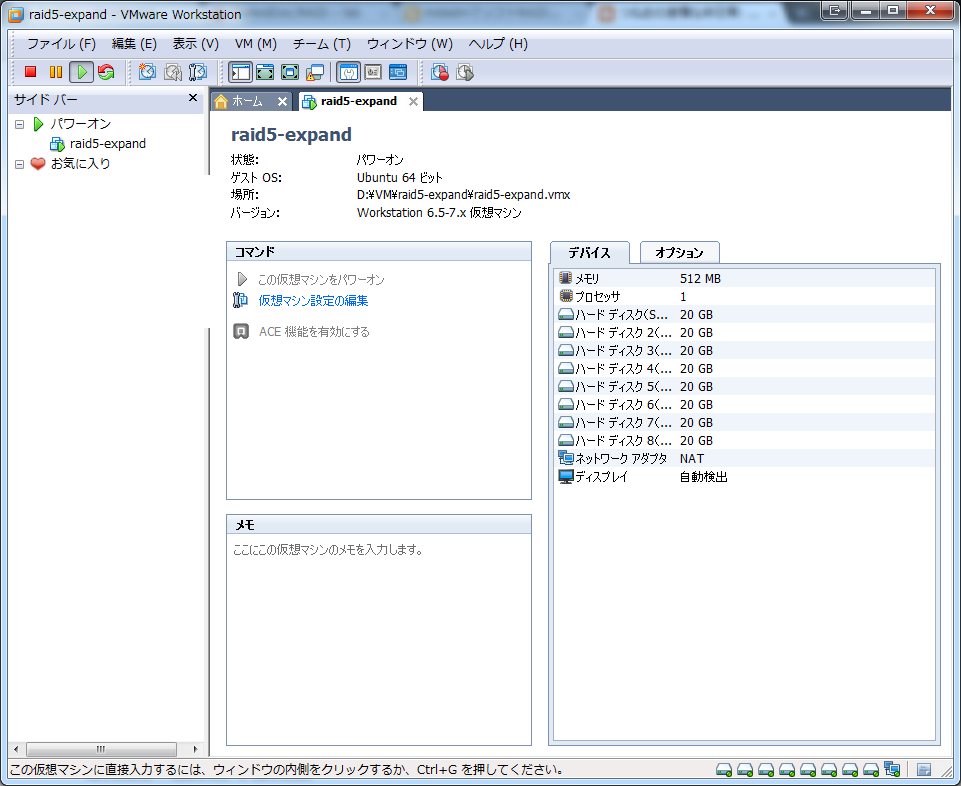

original devices

- mitty@raid5:~$ sudo fdisk -lu /dev/sd?

(snip)
Device Boot Start End Blocks Id System
/dev/sdb1 63 2097215 1048576+ fd Linux raid autodetect
(snip)
Device Boot Start End Blocks Id System
/dev/sdc1 63 2097215 1048576+ fd Linux raid autodetect
(snip)
Device Boot Start End Blocks Id System
/dev/sdd1 63 2097215 1048576+ fd Linux raid autodetect
(snip)
Device Boot Start End Blocks Id System
/dev/sde1 63 2097215 1048576+ fd Linux raid autodetect
(snip)
Device Boot Start End Blocks Id System
/dev/sdf1 63 2097215 1048576+ fd Linux raid autodetect

create /dev/md0 with 5 disks

- mitty@raid5:~$ sudo mdadm --create /dev/md0 -n5 -l 5 /dev/sd[bcdef]1

- mitty@raid5:~$ cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md0 : active raid5 sdf1[4] sde1[3] sdd1[2] sdc1[1] sdb1[0]
4194048 blocks level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]
unused devices: <none>

- mitty@raid5:~$ sudo mdadm -D /dev/md0

/dev/md0:
Version : 00.90
Creation Time : Sat Jun 4 20:48:11 2011
Raid Level : raid5
Array Size : 4194048 (4.00 GiB 4.29 GB)
Used Dev Size : 1048512 (1024.11 MiB 1073.68 MB)
Raid Devices : 5
Total Devices : 5
Preferred Minor : 0
Persistence : Superblock is persistent
Update Time : Sat Jun 4 20:48:30 2011
State : clean
Active Devices : 5
Working Devices : 5
Failed Devices : 0
Spare Devices : 0
Layout : left-symmetric
Chunk Size : 64K
UUID : b49fdd73:9af2093f:0dfb77a3:1c88c57c (local to host raid5)
Events : 0.34
Number Major Minor RaidDevice State
0 8 17 0 active sync /dev/sdb1
1 8 33 1 active sync /dev/sdc1
2 8 49 2 active sync /dev/sdd1
3 8 65 3 active sync /dev/sde1
4 8 81 4 active sync /dev/sdf1
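RAID5 spends one device's worth of space on parity, so the reported Array Size should equal (Raid Devices - 1) × Used Dev Size, both in KiB. A minimal shell sketch of that arithmetic; the function name `raid5_capacity_kib` is hypothetical, and the inputs are the figures mdadm reports on this page:

```shell
#!/bin/sh
# Hypothetical helper (not part of mdadm): expected RAID5 array size in KiB.
#   $1 = number of raid devices
#   $2 = "Used Dev Size" per device in KiB, as shown by `mdadm -D`
raid5_capacity_kib() {
    # one device's worth of every stripe holds parity; the rest holds data
    echo $(( ($1 - 1) * $2 ))
}

raid5_capacity_kib 5 1048512    # -> 4194048  (Array Size with 5 disks)
raid5_capacity_kib 7 1048512    # -> 6291072  (after growing to 7 disks)
raid5_capacity_kib 14 1048512   # -> 13630656 (after growing to 14 disks)

# /proc/mdstat "blocks" are 1 KiB units, so the size in bytes is:
echo $(( $(raid5_capacity_kib 7 1048512) * 1024 ))   # -> 6442057728
```

The byte figure matches the kernel message `md0: detected capacity change from 4294705152 to 6442057728` logged when the 5 -> 7 reshape finishes.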

add to mdadm.conf

- mitty@raid5:~$ sudo mdadm -Ds

ARRAY /dev/md0 level=raid5 num-devices=5 metadata=00.90 UUID=b49fdd73:9af2093f:0dfb77a3:1c88c57c

- mitty@raid5:~$ sudo sh -c "mdadm -Ds >> /etc/mdadm/mdadm.conf"

mkfs and mount

- mitty@raid5:~$ sudo mkfs.xfs /dev/md0

- mitty@raid5:~$ sudo blkid /dev/md0

/dev/md0: UUID="15329342-a08c-4658-9b9d-53568eef427d" TYPE="xfs"

- mitty@raid5:~$ sudo mkdir /var/raid

- mitty@raid5:~$ sudo mount /dev/md0 /var/raid/

- Test data for verifying that the data does not get corrupted

- mitty@raid5:~$ sudo dd if=/dev/urandom of=/var/raid/1G bs=1024 count=1048576

- mitty@raid5:~$ sha1sum -b /var/raid/1G | sudo tee /var/raid/1G.sha1

c3f03a59a865c1aea6455f8838479614e9225f48 */var/raid/1G

- mitty@raid5:~$ df -h /var/raid/

Filesystem Size Used Avail Use% Mounted on
/dev/md0 4.0G 1.1G 3.0G 26% /var/raid

attach 2 new disks

- mitty@raid5:~$ sudo fdisk -lu /dev/sd?

(snip)
Device Boot Start End Blocks Id System
/dev/sdb1 63 2097215 1048576+ fd Linux raid autodetect
(snip)
Device Boot Start End Blocks Id System
/dev/sdc1 63 2097215 1048576+ fd Linux raid autodetect
(snip)
Device Boot Start End Blocks Id System
/dev/sdd1 63 2097215 1048576+ fd Linux raid autodetect
(snip)
Device Boot Start End Blocks Id System
/dev/sde1 63 2097215 1048576+ fd Linux raid autodetect
(snip)
Device Boot Start End Blocks Id System
/dev/sdf1 63 2097215 1048576+ fd Linux raid autodetect
(snip)
Device Boot Start End Blocks Id System
/dev/sdg1 63 2097215 1048576+ fd Linux raid autodetect
(snip)
Device Boot Start End Blocks Id System
/dev/sdh1 63 2097215 1048576+ fd Linux raid autodetect

- mitty@raid5:~$ cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md0 : active raid5 sdc1[1] sdf1[4] sdb1[0] sdd1[2] sde1[3]
4194048 blocks level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]
unused devices: <none>

- mitty@raid5:~$ sudo mdadm -D /dev/md0

mdadm: metadata format 00.90 unknown, ignored.
/dev/md0:
Version : 00.90
Creation Time : Sat Jun 4 20:48:11 2011
Raid Level : raid5
Array Size : 4194048 (4.00 GiB 4.29 GB)
Used Dev Size : 1048512 (1024.11 MiB 1073.68 MB)
Raid Devices : 5
Total Devices : 5
Preferred Minor : 0
Persistence : Superblock is persistent
Update Time : Sat Jun 4 21:24:55 2011
State : clean
Active Devices : 5
Working Devices : 5
Failed Devices : 0
Spare Devices : 0
Layout : left-symmetric
Chunk Size : 64K
UUID : b49fdd73:9af2093f:0dfb77a3:1c88c57c (local to host raid5)
Events : 0.40
Number Major Minor RaidDevice State
0 8 17 0 active sync /dev/sdb1
1 8 33 1 active sync /dev/sdc1
2 8 49 2 active sync /dev/sdd1
3 8 65 3 active sync /dev/sde1
4 8 81 4 active sync /dev/sdf1

add disk 1 of 2

- mitty@raid5:~$ sudo mdadm /dev/md0 -a /dev/sdg1

mdadm: metadata format 00.90 unknown, ignored.
mdadm: added /dev/sdg1

- mitty@raid5:~$ cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md0 : active raid5 sdg1[5](S) sdc1[1] sdf1[4] sdb1[0] sdd1[2] sde1[3]
4194048 blocks level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]
unused devices: <none>

- mitty@raid5:~$ sudo mdadm -D /dev/md0

mdadm: metadata format 00.90 unknown, ignored.
/dev/md0:
Version : 00.90
Creation Time : Sat Jun 4 20:48:11 2011
Raid Level : raid5
Array Size : 4194048 (4.00 GiB 4.29 GB)
Used Dev Size : 1048512 (1024.11 MiB 1073.68 MB)
Raid Devices : 5
Total Devices : 6
Preferred Minor : 0
Persistence : Superblock is persistent
Update Time : Sat Jun 4 21:36:40 2011
State : clean
Active Devices : 5
Working Devices : 6
Failed Devices : 0
Spare Devices : 1
Layout : left-symmetric
Chunk Size : 64K
UUID : b49fdd73:9af2093f:0dfb77a3:1c88c57c (local to host raid5)
Events : 0.42
Number Major Minor RaidDevice State
0 8 17 0 active sync /dev/sdb1
1 8 33 1 active sync /dev/sdc1
2 8 49 2 active sync /dev/sdd1
3 8 65 3 active sync /dev/sde1
4 8 81 4 active sync /dev/sdf1
5 8 97 - spare /dev/sdg1

add disk 2 of 2

- mitty@raid5:~$ sudo mdadm /dev/md0 -a /dev/sdh1

mdadm: metadata format 00.90 unknown, ignored.
mdadm: added /dev/sdh1

- mitty@raid5:~$ cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md0 : active raid5 sdh1[5](S) sdg1[6](S) sdc1[1] sdf1[4] sdb1[0] sdd1[2] sde1[3]
4194048 blocks level 5, 64k chunk, algorithm 2 [5/5] [UUUUU]
unused devices: <none>

- mitty@raid5:~$ sudo mdadm -D /dev/md0

mdadm: metadata format 00.90 unknown, ignored.
/dev/md0:
Version : 00.90
Creation Time : Sat Jun 4 20:48:11 2011
Raid Level : raid5
Array Size : 4194048 (4.00 GiB 4.29 GB)
Used Dev Size : 1048512 (1024.11 MiB 1073.68 MB)
Raid Devices : 5
Total Devices : 7
Preferred Minor : 0
Persistence : Superblock is persistent
Update Time : Sat Jun 4 21:37:28 2011
State : clean
Active Devices : 5
Working Devices : 7
Failed Devices : 0
Spare Devices : 2
Layout : left-symmetric
Chunk Size : 64K
UUID : b49fdd73:9af2093f:0dfb77a3:1c88c57c (local to host raid5)
Events : 0.44
Number Major Minor RaidDevice State
0 8 17 0 active sync /dev/sdb1
1 8 33 1 active sync /dev/sdc1
2 8 49 2 active sync /dev/sdd1
3 8 65 3 active sync /dev/sde1
4 8 81 4 active sync /dev/sdf1
5 8 113 - spare /dev/sdh1
6 8 97 - spare /dev/sdg1
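Both new partitions first join the array as spares, marked `(S)` in /proc/mdstat and listed as `spare` by mdadm -D; the grow step below then uses -n7, i.e. the 5 active devices plus these 2 spares. A small sketch for counting the spares of one array; the `count_spares` name and its optional second argument (so it can be tried on a saved copy of mdstat) are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: number of spare devices, shown as "(S)" in /proc/mdstat,
# on the line that lists the members of one array.
#   $1 = array name, e.g. md0
#   $2 = mdstat file to read (optional, defaults to /proc/mdstat)
count_spares() {
    awk -v md="$1" '
        # the member list is on the line whose first field is the array name;
        # gsub returns how many "(S)" markers it replaced on that line
        $1 == md { n = gsub(/\(S\)/, "") }
        END { print n + 0 }
    ' "${2:-/proc/mdstat}"
}
```

With the mdstat shown above, `count_spares md0` would print 2.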

grow array

cannot convert RAID5 to RAID6

- Apparently, converting a RAID5 array to RAID6 by adding disks to it later is not implemented (at present)

- mitty@raid5:~$ sudo mdadm --grow /dev/md0 -l6 -n7

mdadm: metadata format 00.90 unknown, ignored.
mdadm: Need to backup 1280K of critical section..
mdadm: Cannot set device size/shape for /dev/md0: Invalid argument

- mitty@raid5:~$ tail /var/log/kern.log

Jun 4 21:55:44 raid5 kernel: [ 1842.255232] md: couldn't update array info. -22

- mitty@raid5:~$ man mdadm

(snip)
-l, --level=
(snip)
Not yet supported with --grow.

RAID5 5 -> 7 disks

- mitty@raid5:~$ sudo mdadm --grow /dev/md0 -n7

mdadm: metadata format 00.90 unknown, ignored.
mdadm: Need to backup 768K of critical section..
mdadm: ... critical section passed.

- mitty@raid5:~$ cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md0 : active raid5 sdh1[5] sdg1[6] sdc1[1] sdf1[4] sdb1[0] sdd1[2] sde1[3]
4194048 blocks super 0.91 level 5, 64k chunk, algorithm 2 [7/7] [UUUUUUU]
[>....................] reshape = 0.3% (4160/1048512) finish=25.0min speed=693K/sec
unused devices: <none>

- mitty@raid5:~$ sudo mdadm -D /dev/md0

mdadm: metadata format 00.90 unknown, ignored.
/dev/md0:
Version : 00.91
Creation Time : Sat Jun 4 20:48:11 2011
Raid Level : raid5
Array Size : 4194048 (4.00 GiB 4.29 GB)
Used Dev Size : 1048512 (1024.11 MiB 1073.68 MB)
Raid Devices : 7
Total Devices : 7
Preferred Minor : 0
Persistence : Superblock is persistent
Update Time : Sat Jun 4 21:56:24 2011
State : clean, recovering
Active Devices : 7
Working Devices : 7
Failed Devices : 0
Spare Devices : 0
Layout : left-symmetric
Chunk Size : 64K
Reshape Status : 2% complete
Delta Devices : 2, (5->7)
UUID : b49fdd73:9af2093f:0dfb77a3:1c88c57c (local to host raid5)
Events : 0.74
Number Major Minor RaidDevice State
0 8 17 0 active sync /dev/sdb1
1 8 33 1 active sync /dev/sdc1
2 8 49 2 active sync /dev/sdd1
3 8 65 3 active sync /dev/sde1
4 8 81 4 active sync /dev/sdf1
5 8 113 5 active sync /dev/sdh1
6 8 97 6 active sync /dev/sdg1

- mitty@raid5:~$ tail /var/log/kern.log

Jun 4 21:55:59 raid5 kernel: [ 1857.490635] RAID5 conf printout:
Jun 4 21:55:59 raid5 kernel: [ 1857.490640] --- rd:7 wd:7
Jun 4 21:55:59 raid5 kernel: [ 1857.490642] disk 0, o:1, dev:sdb1
Jun 4 21:55:59 raid5 kernel: [ 1857.490644] disk 1, o:1, dev:sdc1
Jun 4 21:55:59 raid5 kernel: [ 1857.490646] disk 2, o:1, dev:sdd1
Jun 4 21:55:59 raid5 kernel: [ 1857.490648] disk 3, o:1, dev:sde1
Jun 4 21:55:59 raid5 kernel: [ 1857.490650] disk 4, o:1, dev:sdf1
Jun 4 21:55:59 raid5 kernel: [ 1857.490652] disk 5, o:1, dev:sdh1
Jun 4 21:55:59 raid5 kernel: [ 1857.490665] RAID5 conf printout:
Jun 4 21:55:59 raid5 kernel: [ 1857.490666] --- rd:7 wd:7
Jun 4 21:55:59 raid5 kernel: [ 1857.490668] disk 0, o:1, dev:sdb1
Jun 4 21:55:59 raid5 kernel: [ 1857.490670] disk 1, o:1, dev:sdc1
Jun 4 21:55:59 raid5 kernel: [ 1857.490672] disk 2, o:1, dev:sdd1
Jun 4 21:55:59 raid5 kernel: [ 1857.490674] disk 3, o:1, dev:sde1
Jun 4 21:55:59 raid5 kernel: [ 1857.490675] disk 4, o:1, dev:sdf1
Jun 4 21:55:59 raid5 kernel: [ 1857.490677] disk 5, o:1, dev:sdh1
Jun 4 21:55:59 raid5 kernel: [ 1857.490679] disk 6, o:1, dev:sdg1
Jun 4 21:55:59 raid5 kernel: [ 1857.491421] md: reshape of RAID array md0
Jun 4 21:55:59 raid5 kernel: [ 1857.491428] md: minimum _guaranteed_ speed: 1000 KB/sec/disk.
Jun 4 21:55:59 raid5 kernel: [ 1857.491431] md: using maximum available idle IO bandwidth (but not more than 200000 KB/sec) for reshape.
Jun 4 21:55:59 raid5 kernel: [ 1857.491443] md: using 128k window, over a total of 1048512 blocks.
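While the reshape runs, progress shows up both in /proc/mdstat (`reshape = 0.3%`) and in the `Reshape Status` line of mdadm -D. A sketch that extracts the percentage for one array from /proc/mdstat, e.g. for a monitoring script; `reshape_progress` is a hypothetical name, and it assumes the mdstat layout shown above, where the progress line follows the array's own line:

```shell
#!/bin/sh
# Hypothetical helper: print the reshape percentage of one md array.
#   $1 = array name, e.g. md0
#   $2 = mdstat file to read (optional, defaults to /proc/mdstat)
# Prints nothing when that array is not reshaping.
reshape_progress() {
    awk -v md="$1" '
        $1 == md        { inside = 1; next }   # start of the block for this array
        $1 ~ /^md[0-9]/ { inside = 0 }         # another array starts
        /^[ \t]*$/      { inside = 0 }         # blank line ends the block
        inside && /reshape =/ {
            # fields look like: [>....]  reshape =  0.3% (4160/1048512) ...
            for (i = 1; i < NF; i++)
                if ($i == "=") { print $(i + 1); exit }
        }
    ' "${2:-/proc/mdstat}"
}
```

For example, `watch -n 60 'sh -c ". ./reshape_progress.sh; reshape_progress md0"'` would refresh it every minute, assuming the function was saved under that (hypothetical) file name.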

reshape done

- mitty@raid5:~$ tail /var/log/kern.log

Jun 4 22:01:26 raid5 kernel: [ 2184.441331] md: md0: reshape done.
Jun 4 22:01:26 raid5 kernel: [ 2184.448127] RAID5 conf printout:
Jun 4 22:01:26 raid5 kernel: [ 2184.448130] --- rd:7 wd:7
Jun 4 22:01:26 raid5 kernel: [ 2184.448132] disk 0, o:1, dev:sdb1
Jun 4 22:01:26 raid5 kernel: [ 2184.448134] disk 1, o:1, dev:sdc1
Jun 4 22:01:26 raid5 kernel: [ 2184.448136] disk 2, o:1, dev:sdd1
Jun 4 22:01:26 raid5 kernel: [ 2184.448138] disk 3, o:1, dev:sde1
Jun 4 22:01:26 raid5 kernel: [ 2184.448140] disk 4, o:1, dev:sdf1
Jun 4 22:01:26 raid5 kernel: [ 2184.448141] disk 5, o:1, dev:sdh1
Jun 4 22:01:26 raid5 kernel: [ 2184.448143] disk 6, o:1, dev:sdg1
Jun 4 22:01:26 raid5 kernel: [ 2184.448154] md0: detected capacity change from 4294705152 to 6442057728
Jun 4 22:01:26 raid5 kernel: [ 2184.489608] VFS: busy inodes on changed media or resized disk md0

- mitty@raid5:~$ cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md0 : active raid5 sdh1[5] sdg1[6] sdc1[1] sdf1[4] sdb1[0] sdd1[2] sde1[3]
6291072 blocks level 5, 64k chunk, algorithm 2 [7/7] [UUUUUUU]
unused devices: <none>

- mitty@raid5:~$ sudo mdadm -D /dev/md0

mdadm: metadata format 00.90 unknown, ignored.
/dev/md0:
Version : 00.90
Creation Time : Sat Jun 4 20:48:11 2011
Raid Level : raid5
Array Size : 6291072 (6.00 GiB 6.44 GB)
Used Dev Size : 1048512 (1024.11 MiB 1073.68 MB)
Raid Devices : 7
Total Devices : 7
Preferred Minor : 0
Persistence : Superblock is persistent
Update Time : Sat Jun 4 22:02:10 2011
State : active
Active Devices : 7
Working Devices : 7
Failed Devices : 0
Spare Devices : 0
Layout : left-symmetric
Chunk Size : 64K
UUID : b49fdd73:9af2093f:0dfb77a3:1c88c57c (local to host raid5)
Events : 0.137
Number Major Minor RaidDevice State
0 8 17 0 active sync /dev/sdb1
1 8 33 1 active sync /dev/sdc1
2 8 49 2 active sync /dev/sdd1
3 8 65 3 active sync /dev/sde1
4 8 81 4 active sync /dev/sdf1
5 8 113 5 active sync /dev/sdh1
6 8 97 6 active sync /dev/sdg1

xfs_growfs

- mitty@raid5:~$ df -h /var/raid/

Filesystem Size Used Avail Use% Mounted on
/dev/md0 4.0G 1.1G 3.0G 26% /var/raid

- mitty@raid5:~$ mount

/dev/md0 on /var/raid type xfs (rw)

- mitty@raid5:~$ sudo xfs_growfs /var/raid/

meta-data=/dev/md0 isize=256 agcount=8, agsize=131056 blks
= sectsz=4096 attr=2
data = bsize=4096 blocks=1048448, imaxpct=25
= sunit=16 swidth=64 blks
naming =version 2 bsize=4096 ascii-ci=0
log =internal bsize=4096 blocks=2560, version=2
= sectsz=4096 sunit=1 blks, lazy-count=1
realtime =none extsz=4096 blocks=0, rtextents=0
data blocks changed from 1048448 to 1572768

- mitty@raid5:~$ df -h /var/raid/

Filesystem Size Used Avail Use% Mounted on
/dev/md0 6.0G 1.1G 5.0G 17% /var/raid
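mkfs.xfs aligned the filesystem to the RAID stripe: sunit=16 is the 64K chunk divided by the 4096-byte block size, and swidth=64 is sunit times the 4 data disks of the original 5-disk RAID5. The xfs_growfs output for the later 7 -> 14 grow still reports swidth=64, so neither the 5 -> 7 reshape nor the xfs_growfs that followed it changed this geometry. A sketch that computes the values for a given disk count, also in the 512-byte units that the XFS `sunit`/`swidth` mount options take; `xfs_stripe_geometry` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: XFS stripe geometry matching a RAID5 layout.
#   $1 = md chunk size in KiB (64 here)
#   $2 = number of raid devices
#   $3 = filesystem block size in KiB (4 here, i.e. bsize=4096)
xfs_stripe_geometry() {
    data_disks=$(( $2 - 1 ))          # RAID5: one device's worth is parity
    sunit=$(( $1 / $3 ))              # stripe unit in filesystem blocks
    swidth=$(( sunit * data_disks ))  # stripe width in filesystem blocks
    # the same values in 512-byte sectors (2 sectors per KiB)
    echo "sunit=$sunit swidth=$swidth blks (512-byte units: sunit=$(( $1 * 2 )) swidth=$(( $1 * 2 * data_disks )))"
}

xfs_stripe_geometry 64 5 4    # same sunit=16 swidth=64 as mkfs.xfs chose
xfs_stripe_geometry 64 7 4    # geometry for the 7-disk array
xfs_stripe_geometry 64 14 4   # geometry for the 14-disk array
```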

check data

- mitty@raid5:~$ sha1sum -c /var/raid/1G.sha1

/var/raid/1G: OK

- mitty@raid5:~$ sudo mdadm -Ds

mdadm: metadata format 00.90 unknown, ignored.
ARRAY /dev/md0 level=raid5 num-devices=7 metadata=00.90 UUID=b49fdd73:9af2093f:0dfb77a3:1c88c57c
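The ARRAY line appended to /etc/mdadm/mdadm.conf right after creating the array still says num-devices=5, while mdadm -Ds now reports num-devices=7. Running the same `>>` append again would leave two ARRAY lines with the same UUID. A sketch of replacing the line instead; `update_array_line` is a hypothetical helper, and it assumes the new line is a single ARRAY line (one array on the host):

```shell
#!/bin/sh
# Hypothetical helper: replace, rather than append, the ARRAY line of one array.
#   $1 = path to mdadm.conf
#   $2 = one ARRAY line as printed by `mdadm -Ds`
update_array_line() {
    conf=$1
    line=$2
    # pull the UUID=... value out of the new ARRAY line
    uuid=$(printf '%s\n' "$line" | sed -n 's/.*UUID=\([0-9a-fA-F:]*\).*/\1/p')
    [ -n "$uuid" ] || { echo "no UUID in: $line" >&2; return 1; }
    tmp=$(mktemp) || return 1
    # keep every line that does not mention this UUID, then add the fresh line
    grep -v -F "UUID=$uuid" "$conf" > "$tmp"
    printf '%s\n' "$line" >> "$tmp"
    cat "$tmp" > "$conf"   # rewrite in place, keeping the file's owner and mode
    rm -f "$tmp"
}
```

Usage, assuming the function was saved as update_array_line.sh (a hypothetical file name): `sudo sh -c '. ./update_array_line.sh && update_array_line /etc/mdadm/mdadm.conf "$(mdadm -Ds)"'`.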

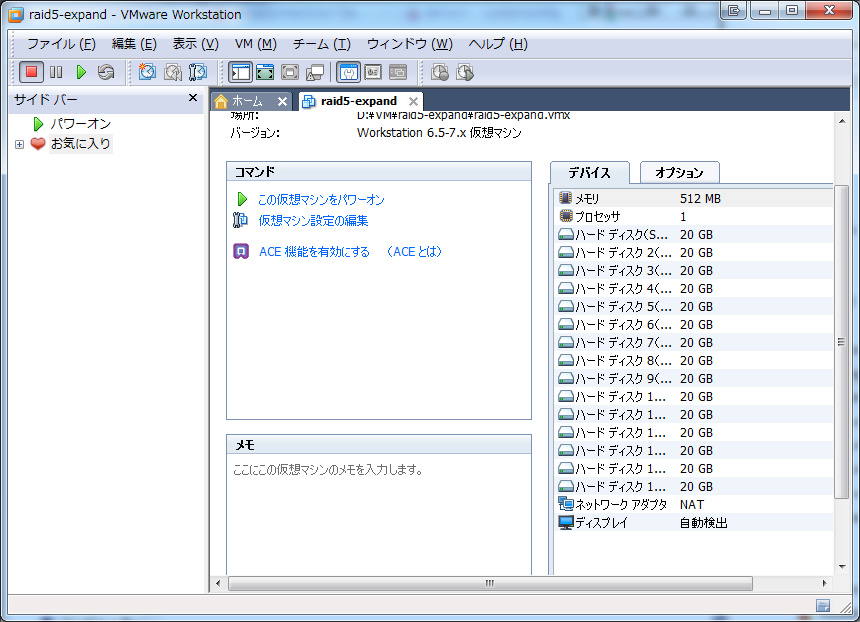

7 -> 14 disks

- mitty@raid5:~$ cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md0 : active raid5 sdf1[4] sdg1[6] sdd1[2] sdh1[5] sde1[3] sdc1[1] sdb1[0]
6291072 blocks level 5, 64k chunk, algorithm 2 [7/7] [UUUUUUU]
unused devices: <none>

add disks

- mitty@raid5:~$ sudo mdadm /dev/md0 -a /dev/sd[ijklmno]1

mdadm: metadata format 00.90 unknown, ignored.
mdadm: added /dev/sdi1
mdadm: added /dev/sdj1
mdadm: added /dev/sdk1
mdadm: added /dev/sdl1
mdadm: added /dev/sdm1
mdadm: added /dev/sdn1
mdadm: added /dev/sdo1

- mitty@raid5:~$ cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md0 : active raid5 sdo1[7](S) sdn1[8](S) sdm1[9](S) sdl1[10](S) sdk1[11](S) sdj1[12](S) sdi1[13](S) sdf1[4] sdg1[6] sdd1[2] sdh1[5] sde1[3] sdc1[1] sdb1[0]
6291072 blocks level 5, 64k chunk, algorithm 2 [7/7] [UUUUUUU]
unused devices: <none>

- mitty@raid5:~$ sudo mdadm -D /dev/md0

mdadm: metadata format 00.90 unknown, ignored.
/dev/md0:
Version : 00.90
Creation Time : Sat Jun 4 20:48:11 2011
Raid Level : raid5
Array Size : 6291072 (6.00 GiB 6.44 GB)
Used Dev Size : 1048512 (1024.11 MiB 1073.68 MB)
Raid Devices : 7
Total Devices : 14
Preferred Minor : 0
Persistence : Superblock is persistent
Update Time : Sun Jun 5 09:14:36 2011
State : clean
Active Devices : 7
Working Devices : 14
Failed Devices : 0
Spare Devices : 7
Layout : left-symmetric
Chunk Size : 64K
UUID : b49fdd73:9af2093f:0dfb77a3:1c88c57c (local to host raid5)
Events : 0.150
Number Major Minor RaidDevice State
0 8 17 0 active sync /dev/sdb1
1 8 33 1 active sync /dev/sdc1
2 8 49 2 active sync /dev/sdd1
3 8 65 3 active sync /dev/sde1
4 8 81 4 active sync /dev/sdf1
5 8 113 5 active sync /dev/sdh1
6 8 97 6 active sync /dev/sdg1
7 8 225 - spare /dev/sdo1
8 8 209 - spare /dev/sdn1
9 8 193 - spare /dev/sdm1
10 8 177 - spare /dev/sdl1
11 8 161 - spare /dev/sdk1
12 8 145 - spare /dev/sdj1
13 8 129 - spare /dev/sdi1

grow array

- mitty@raid5:~$ sudo mdadm --grow /dev/md0 -n14

mdadm: metadata format 00.90 unknown, ignored.
mdadm: Need to backup 832K of critical section..
mdadm: ... critical section passed.

- mitty@raid5:~$ sudo mdadm -D /dev/md0

mdadm: metadata format 00.90 unknown, ignored.
/dev/md0:
Version : 00.91
Creation Time : Sat Jun 4 20:48:11 2011
Raid Level : raid5
Array Size : 6291072 (6.00 GiB 6.44 GB)
Used Dev Size : 1048512 (1024.11 MiB 1073.68 MB)
Raid Devices : 14
Total Devices : 14
Preferred Minor : 0
Persistence : Superblock is persistent
Update Time : Sun Jun 5 09:16:21 2011
State : clean, recovering
Active Devices : 14
Working Devices : 14
Failed Devices : 0
Spare Devices : 0
Layout : left-symmetric
Chunk Size : 64K
Reshape Status : 0% complete
Delta Devices : 7, (7->14)
UUID : b49fdd73:9af2093f:0dfb77a3:1c88c57c (local to host raid5)
Events : 0.164
Number Major Minor RaidDevice State
0 8 17 0 active sync /dev/sdb1
1 8 33 1 active sync /dev/sdc1
2 8 49 2 active sync /dev/sdd1
3 8 65 3 active sync /dev/sde1
4 8 81 4 active sync /dev/sdf1
5 8 113 5 active sync /dev/sdh1
6 8 97 6 active sync /dev/sdg1
7 8 225 7 active sync /dev/sdo1
8 8 209 8 active sync /dev/sdn1
9 8 193 9 active sync /dev/sdm1
10 8 177 10 active sync /dev/sdl1
11 8 161 11 active sync /dev/sdk1
12 8 145 12 active sync /dev/sdj1
13 8 129 13 active sync /dev/sdi1

- mitty@raid5:~$ cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md0 : active raid5 sdo1[7] sdn1[8] sdm1[9] sdl1[10] sdk1[11] sdj1[12] sdi1[13] sdf1[4] sdg1[6] sdd1[2] sdh1[5] sde1[3] sdc1[1] sdb1[0]
6291072 blocks super 0.91 level 5, 64k chunk, algorithm 2 [14/14] [UUUUUUUUUUUUUU]
[>....................] reshape = 0.6% (7068/1048512) finish=44.1min speed=392K/sec
unused devices: <none>
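During the reshape the array still reports its old size (6291072 blocks); the new size only appears once the reshape is done, so xfs_growfs has nothing to grow into before then. A sketch that blocks until /proc/mdstat no longer lists a reshape; `wait_for_reshape` and its arguments are hypothetical. mdadm also documents a `--wait` (`-W`) option that waits for resync, recovery or reshape activity to finish, which may be simpler where the installed version supports it:

```shell
#!/bin/sh
# Hypothetical helper: return once /proc/mdstat no longer shows any reshape.
#   $1 = seconds between checks (optional, default 30)
#   $2 = mdstat file to read (optional, defaults to /proc/mdstat)
wait_for_reshape() {
    interval=${1:-30}
    stat=${2:-/proc/mdstat}
    [ -r "$stat" ] || { echo "cannot read $stat" >&2; return 1; }
    # an array that is still reshaping has a "reshape = ..." progress line
    while grep -q 'reshape =' "$stat"; do
        sleep "$interval"
    done
}
```

For example: `wait_for_reshape 60 && sudo xfs_growfs /var/raid/`.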

reshape done

- mitty@raid5:~$ cat /proc/mdstat

Personalities : [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4] [raid10]
md0 : active raid5 sdo1[7] sdn1[8] sdm1[9] sdl1[10] sdk1[11] sdj1[12] sdi1[13] sdf1[4] sdg1[6] sdd1[2] sdh1[5] sde1[3] sdc1[1] sdb1[0]
13630656 blocks level 5, 64k chunk, algorithm 2 [14/14] [UUUUUUUUUUUUUU]
unused devices: <none>

- mitty@raid5:~$ sudo mdadm -D /dev/md0

mdadm: metadata format 00.90 unknown, ignored.
/dev/md0:
Version : 00.90
Creation Time : Sat Jun 4 20:48:11 2011
Raid Level : raid5
Array Size : 13630656 (13.00 GiB 13.96 GB)
Used Dev Size : 1048512 (1024.11 MiB 1073.68 MB)
Raid Devices : 14
Total Devices : 14
Preferred Minor : 0
Persistence : Superblock is persistent
Update Time : Sun Jun 5 09:21:17 2011
State : clean
Active Devices : 14
Working Devices : 14
Failed Devices : 0
Spare Devices : 0
Layout : left-symmetric
Chunk Size : 64K
UUID : b49fdd73:9af2093f:0dfb77a3:1c88c57c (local to host raid5)
Events : 0.226
Number Major Minor RaidDevice State
0 8 17 0 active sync /dev/sdb1
1 8 33 1 active sync /dev/sdc1
2 8 49 2 active sync /dev/sdd1
3 8 65 3 active sync /dev/sde1
4 8 81 4 active sync /dev/sdf1
5 8 113 5 active sync /dev/sdh1
6 8 97 6 active sync /dev/sdg1
7 8 225 7 active sync /dev/sdo1
8 8 209 8 active sync /dev/sdn1
9 8 193 9 active sync /dev/sdm1
10 8 177 10 active sync /dev/sdl1
11 8 161 11 active sync /dev/sdk1
12 8 145 12 active sync /dev/sdj1
13 8 129 13 active sync /dev/sdi1

xfs_growfs

- mitty@raid5:~$ sudo xfs_growfs /var/raid/

xfs_growfs: /var/raid/ is not a mounted XFS filesystem

- xfs_growfs needs the filesystem to be mounted, though a read-only mount is enough

- mitty@raid5:~$ sudo mount -r /dev/md0 /var/raid/

- mitty@raid5:~$ sudo xfs_growfs /var/raid/

meta-data=/dev/md0 isize=256 agcount=13, agsize=131056 blks
= sectsz=4096 attr=2
data = bsize=4096 blocks=1572768, imaxpct=25
= sunit=16 swidth=64 blks
naming =version 2 bsize=4096 ascii-ci=0
log =internal bsize=4096 blocks=2560, version=2
= sectsz=4096 sunit=1 blks, lazy-count=1
realtime =none extsz=4096 blocks=0, rtextents=0
data blocks changed from 1572768 to 3407664

- mitty@raid5:~$ mount

/dev/md0 on /var/raid type xfs (ro)

- mitty@raid5:~$ df -h /var/raid/

Filesystem Size Used Avail Use% Mounted on
/dev/md0 13G 1.1G 12G 8% /var/raid
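Because xfs_growfs refuses to work on an unmounted filesystem, a script can check /proc/mounts before calling it. A sketch; `is_xfs_mounted` is a hypothetical helper, and it assumes mount points without spaces (which /proc/mounts would escape):

```shell
#!/bin/sh
# Hypothetical helper: succeed only if $1 is a mounted XFS filesystem.
#   $1 = mount point, with or without a trailing slash
#   $2 = mount table to read (optional, defaults to /proc/mounts)
is_xfs_mounted() {
    mnt=${1%/}                  # /var/raid/ -> /var/raid
    [ -n "$mnt" ] || mnt=/      # "/" itself would otherwise become empty
    # /proc/mounts fields: device mountpoint fstype options dump pass
    awk -v m="$mnt" '$2 == m && $3 == "xfs" { found = 1 } END { exit !found }' \
        "${2:-/proc/mounts}"
}
```

For example: `is_xfs_mounted /var/raid/ && sudo xfs_growfs /var/raid/`.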

misc

- If the system is rebooted during a reshape, the reshape restarts from the beginning of the array

- $ dmesg

[ 14.359376] raid5: reshape will continue
(snip)
[ 14.361290] ...ok start reshape thread


Attachments (3)

- attach7.png (71.4 KB) - added by mitty 15 years ago.

- attach14.png (72.1 KB) - added by mitty 15 years ago.

- access14.png (49.4 KB) - added by mitty 15 years ago.
