Version 16 (modified by mitty, 11 years ago)


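- LVM のスナップショット機能を使ってみる ("Trying out LVM's snapshot feature") - いますぐ実践! Linuxシステム管理 / Vol.166

- @IT: LVMによる自動バックアップ・システムの構築 ("Building an automatic backup system with LVM") (1/3)

- Chapter 11. Using LVM with DRBD

- ThinkIT 第9回: バックアップにおけるスナップショットの活用 ("Part 9: Using snapshots for backups") (1/3)

- Tips/Linux/LVM - 福岡大学奥村研究室 (Okumura Lab, Fukuoka University) - okkun-lab Pukiwiki!

- LVMパーティションの拡張 ("Extending an LVM partition")

- arch:LVM (fairly detailed)

- https://wiki.gentoo.org/wiki/LVM (very well organized)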

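- Volume naming rules

- Must not begin with a hyphen

- "." and ".." on their own are not allowed (names such as ".hoge" are fine)

- ASCII letters, digits, ".", "_", "+" and "-" (but not as the first character) are allowed

- Fewer than 128 characters

- In practice the limit is somewhat shorter: https://git.fedorahosted.org/cgit/lvm2.git/tree/lib/metadata/metadata.c#n2597 vg_validate()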
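The rules above can be checked before calling `vgcreate`/`lvcreate`. Below is a minimal sketch; `is_valid_lvm_name` is a hypothetical helper, not part of lvm2, and it models only the rules listed here, not lvm2's extra checks (reserved LV name suffixes, the tighter practical length limit enforced in vg_validate()).

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of lvm2): returns 0 if NAME satisfies the
# basic VG/LV naming rules listed above, 1 otherwise. lvm2 applies further
# checks (reserved suffixes, combined VG/LV length) that are not modelled.
is_valid_lvm_name() {
    local name=$1

    # Length: non-empty and fewer than 128 characters
    if (( ${#name} == 0 || ${#name} >= 128 )); then
        return 1
    fi
    # "." and ".." on their own are reserved
    if [[ $name == "." || $name == ".." ]]; then
        return 1
    fi
    # A leading hyphen is not allowed
    if [[ $name == -* ]]; then
        return 1
    fi
    # Only ASCII letters, digits, '.', '_', '+' and '-'
    local re='^[A-Za-z0-9._+-]+$'
    [[ $name =~ $re ]]
}

for n in vgnfs .hoge -bad .. 'has space'; do
    if is_valid_lvm_name "$n"; then echo "ok:  $n"; else echo "bad: $n"; fi
done
```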

- Meaning of the Attr column in vgs/lvs output

- https://git.fedorahosted.org/cgit/lvm2.git/tree/lib/metadata/vg.c#n639 vg_attr_dup()

- https://git.fedorahosted.org/cgit/lvm2.git/tree/lib/metadata/lv.c#n641 lv_attr_dup_with_info_and_seg_status()
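As a reading aid, here is a partial decoder for the 10-character `lv_attr` string, with letter meanings taken from lvs(8); the source files linked above remain the authoritative reference. `decode_lv_attr` is a hypothetical helper: only a subset of the documented letters is mapped, and anything else is reported as `other(<char>)`. The example string is the thin pool attr that appears in the error_when_full section below.

```bash
#!/usr/bin/env bash
# Hypothetical helper: decodes selected positions of an lvs lv_attr string
# (e.g. "twi-aotzD-"). Letter meanings follow lvs(8); unmapped letters
# are printed as other(<char>).
decode_lv_attr() {
    local attr=$1
    if (( ${#attr} < 10 )); then
        echo "lv_attr too short: $attr" >&2
        return 1
    fi
    # 0-based positions: 0=type 1=permissions 4=state 5=open
    # 8=health 9=skip activation
    local type=${attr:0:1} perm=${attr:1:1} state=${attr:4:1}
    local open=${attr:5:1} health=${attr:8:1} skip=${attr:9:1}

    case $type in
        V) echo "type=thin volume" ;;
        t) echo "type=thin pool" ;;
        s) echo "type=snapshot" ;;
        o) echo "type=origin" ;;
        r) echo "type=raid" ;;
        m) echo "type=mirrored" ;;
        -) echo "type=plain (e.g. linear)" ;;
        *) echo "type=other($type)" ;;
    esac
    case $perm in
        w) echo "perm=writeable" ;;
        r) echo "perm=read-only" ;;
        *) echo "perm=other($perm)" ;;
    esac
    case $state in
        a) echo "state=active" ;;
        -) echo "state=inactive" ;;
        *) echo "state=other($state)" ;;
    esac
    if [[ $open == o ]]; then echo "open=yes"; else echo "open=no"; fi
    case $health in
        -) echo "health=ok" ;;
        p) echo "health=partial (a PV is missing)" ;;
        D) echo "health=out of data space (thin pool)" ;;
        M) echo "health=metadata read only (thin pool)" ;;
        F) echo "health=failed" ;;
        *) echo "health=other($health)" ;;
    esac
    if [[ $skip == k ]]; then echo "skip_activation=yes"; else echo "skip_activation=no"; fi
}

decode_lv_attr twi-aotzD-
```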

lvchange/vgchange -ay

- `root@Knoppix:~# vgdisplay -v`

          Finding all volume groups
          Finding volume group "vgnfs"
        --- Volume group ---
        VG Name               vgnfs
        System ID
        Format                lvm2
        Metadata Areas        1
        Metadata Sequence No  2
        VG Access             read/write
        VG Status             resizable
        MAX LV                0
        Cur LV                1
        Open LV               0
        Max PV                0
        Cur PV                1
        Act PV                1
        VG Size               1.94 GB
        PE Size               32.00 MB
        Total PE              62
        Alloc PE / Size       48 / 1.50 GB
        Free PE / Size        14 / 448.00 MB
        VG UUID               I6vVoh-6gCJ-9uvA-v2MV-Fyva-7J8v-Cvftfi

        --- Logical volume ---
        LV Name                /dev/vgnfs/drbd
        VG Name                vgnfs
        LV UUID                dNxdNj-hZCZ-GMrC-woMk-0hA2-f3oR-sBsrHI
        LV Write Access        read/write
        LV Status              NOT available
        LV Size                1.50 GB
        Current LE             48
        Segments               1
        Allocation             inherit
        Read ahead sectors     0

        --- Physical volumes ---
        PV Name               /dev/md0
        PV UUID               Z2JXRP-fa5g-SYS5-xzMs-Lq8C-1Jbh-QPKihr
        PV Status             allocatable
        Total PE / Free PE    62 / 14

- The LV is "NOT available"

- Make the LV available with `lvchange -ay` or `vgchange -ay`

- `root@Knoppix:~# lvchange -ay /dev/vgnfs/drbd`

- `root@Knoppix:~# vgchange -ay`

        1 logical volume(s) in volume group "vgnfs" now active

- Debianのリカバリー ("Recovering Debian"; ゆうちくりんの忘却禄 blog)

- `root@Knoppix:~# vgdisplay -v`

          Finding all volume groups
          Finding volume group "vgnfs"
        --- Volume group ---
        VG Name               vgnfs
        System ID
        Format                lvm2
        Metadata Areas        1
        Metadata Sequence No  2
        VG Access             read/write
        VG Status             resizable
        MAX LV                0
        Cur LV                1
        Open LV               0
        Max PV                0
        Cur PV                1
        Act PV                1
        VG Size               1.94 GB
        PE Size               32.00 MB
        Total PE              62
        Alloc PE / Size       48 / 1.50 GB
        Free PE / Size        14 / 448.00 MB
        VG UUID               I6vVoh-6gCJ-9uvA-v2MV-Fyva-7J8v-Cvftfi

        --- Logical volume ---
        LV Name                /dev/vgnfs/drbd
        VG Name                vgnfs
        LV UUID                dNxdNj-hZCZ-GMrC-woMk-0hA2-f3oR-sBsrHI
        LV Write Access        read/write
        LV Status              available
        # open                 0
        LV Size                1.50 GB
        Current LE             48
        Segments               1
        Allocation             inherit
        Read ahead sectors     0
        Block device           254:0

        --- Physical volumes ---
        PV Name               /dev/md0
        PV UUID               Z2JXRP-fa5g-SYS5-xzMs-Lq8C-1Jbh-QPKihr
        PV Status             allocatable
        Total PE / Free PE    62 / 14
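The sizes in the `vgdisplay` output follow directly from the extent counts: each size is a number of PEs times the PE size. A small bash check using the numbers shown above (PE Size 32 MB, 62 total, 48 allocated, 14 free); `pe_to_mb` is only an illustrative name.

```bash
#!/usr/bin/env bash
# Illustrative arithmetic: converts extent counts from vgdisplay into sizes.
PE_SIZE_MB=32   # "PE Size 32.00 MB"
TOTAL_PE=62     # "Total PE 62"
ALLOC_PE=48     # "Alloc PE / Size 48 / 1.50 GB"
FREE_PE=14      # "Free PE / Size 14 / 448.00 MB"

pe_to_mb() {
    echo $(( $1 * PE_SIZE_MB ))
}

# vgdisplay uses 1024-based units: 1984 MB / 1024 = 1.9375, shown as 1.94 GB
echo "VG size:   $(pe_to_mb "$TOTAL_PE") MB"
# 1536 MB = 1.50 GB, which also matches "Current LE 48" of the single LV
echo "Allocated: $(pe_to_mb "$ALLOC_PE") MB"
echo "Free:      $(pe_to_mb "$FREE_PE") MB"
# Allocated + free extents must add up to the total
(( ALLOC_PE + FREE_PE == TOTAL_PE )) && echo "extent counts are consistent"
```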

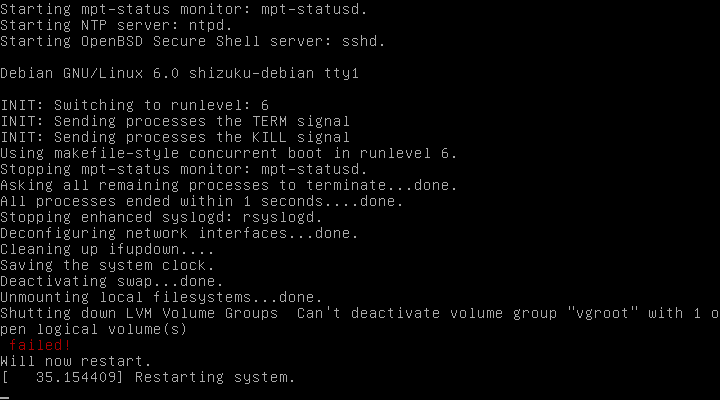

Can't deactivate

- At shutdown: `Shutting down LVM Volume Groups Can't deactivate volume group "vgroot" with 1 open logical volume(s) failed!`

- Debian 6.0 with kernel 2.6.39-bpo.2-amd64

- lvm2: 2.02.66-5

- `mitty@shizuku-debian:~$ ls /etc/rc6.d/ -l`

      lrwxrwxrwx 1 root root  21 Oct 22 16:11 K01mpt-statusd -> ../init.d/mpt-statusd
      lrwxrwxrwx 1 root root  17 Oct 22 16:07 K01urandom -> ../init.d/urandom
      lrwxrwxrwx 1 root root  18 Oct 22 16:11 K02sendsigs -> ../init.d/sendsigs
      lrwxrwxrwx 1 root root  17 Oct 22 16:11 K03rsyslog -> ../init.d/rsyslog
      lrwxrwxrwx 1 root root  20 Oct 22 16:11 K04hwclock.sh -> ../init.d/hwclock.sh
      lrwxrwxrwx 1 root root  22 Oct 22 16:11 K04umountnfs.sh -> ../init.d/umountnfs.sh
      lrwxrwxrwx 1 root root  20 Oct 22 16:11 K05networking -> ../init.d/networking
      lrwxrwxrwx 1 root root  18 Oct 22 16:11 K06ifupdown -> ../init.d/ifupdown
      lrwxrwxrwx 1 root root  18 Oct 22 16:11 K07umountfs -> ../init.d/umountfs
      lrwxrwxrwx 1 root root  14 Oct 22 16:11 K08lvm2 -> ../init.d/lvm2
      lrwxrwxrwx 1 root root  20 Oct 22 16:11 K09umountroot -> ../init.d/umountroot
      lrwxrwxrwx 1 root root  16 Oct 22 16:11 K10reboot -> ../init.d/reboot
      -rw-r--r-- 1 root root 351 Jan  1  2011 README

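- #466141 - lvm2: error message during shutdown (Can't deactivate volume group...) - Debian Bug report logs: "This is not a bug". In the listing above, K08lvm2 runs before K09umountroot, so the root filesystem (presumably on vgroot) is still mounted and its LV is still open when lvm2 tries to deactivate the VG.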
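To check the K-script order on another sysvinit system (whether `lvm2` is stopped before `umountroot`), the sequence numbers can be pulled out of an rc directory listing. `kill_seq` is a hypothetical bash helper written for this page; it reads entry names on stdin, so it can be tested without touching `/etc`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: reads rc directory entry names (one per line, such as
# "K08lvm2") on stdin and prints the kill sequence number of script $1.
# Returns 1 if no K entry for that script is found.
kill_seq() {
    local want=$1 entry
    local re='^K([0-9]+)(.+)$'
    while read -r entry; do
        if [[ $entry =~ $re ]] && [[ ${BASH_REMATCH[2]} == "$want" ]]; then
            echo $(( 10#${BASH_REMATCH[1]} ))   # base 10, so "08" is not read as octal
            return 0
        fi
    done
    return 1
}

# Usage on a Debian sysvinit system:
#   lvm=$(ls /etc/rc6.d | kill_seq lvm2)
#   root=$(ls /etc/rc6.d | kill_seq umountroot)
#   (( lvm < root )) && echo "lvm2 is stopped before umountroot runs"
```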

LVM RAID

- raid - RAIDing with LVM vs MDRAID - pros and cons? - Unix & Linux Stack Exchange

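- Contains detailed examples on Debian Wheezy/Jessie. Information on LVM-RAID still seems hard to find as of this writing (2015/05).

- LVM-RAID apparently uses the same code as MD-RAID underneath (the device-mapper dm-raid target drives the kernel's MD RAID personalities).

- 4.4.15. RAID Logical Volumes

- The RHEL manual for using LVM-RAID. It states: "As of the Red Hat Enterprise Linux 6.3 release, LVM supports RAID4/5/6 and a new implementation of mirroring."

- 6.3. Recovering from LVM Mirror Failure

- This page is linked from 4.4.3. Creating Mirrored Volumes, which is described as the old implementation, so it is unclear whether it also applies to the new implementation.

- The RHEL 7 edition (not available in Japanese) has almost the same chapter structure: Logical Volume Manager Administration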

- https://wiki.gentoo.org/wiki/LVM#Different_storage_allocation_methods

It is not possible to stripe an existing volume, nor reshape the stripes across more/less physical volumes, nor to convert to a different RAID level/linear volume. A stripe set can be mirrored. It is possible to extend a stripe set across additional physical volumes, but they must be added in multiples of the original stripe set (which will effectively linearly append a new stripe set).

- Not being able to reshape (and so on) with RAID1/4/5/6 seems like a fairly severe restriction. MD-RAID can do this, so support may be added eventually.
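The stripe-extension constraint in the Gentoo quote (a stripe set can only grow by whole stripe sets) reduces to a divisibility check. A hypothetical bash sketch; `stripe_extension_sets` is not an lvm2 command, and it encodes only the rule as quoted, not every layout `lvextend` might accept.

```bash
#!/usr/bin/env bash
# Hypothetical check of the quoted rule: a striped LV with STRIPES stripes
# can only be extended by adding PVs in multiples of STRIPES; each group of
# STRIPES PVs is appended linearly as another stripe set.
# Prints the number of stripe sets appended, or fails with a message.
stripe_extension_sets() {
    local stripes=$1 added_pvs=$2
    if (( stripes < 1 || added_pvs < 1 || added_pvs % stripes != 0 )); then
        echo "cannot extend a ${stripes}-stripe set with ${added_pvs} PV(s)" >&2
        return 1
    fi
    echo $(( added_pvs / stripes ))
}

stripe_extension_sets 2 4   # two more 2-stripe sets appended
```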

LVM with MD-RAID

- mdadm と LVM で作る、全手動 BeyondRAID もどき ("A fully manual BeyondRAID look-alike built with mdadm and LVM") - 守破離

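Roughly speaking, you build several regions that span the HDDs with RAID1 or RAID5, then combine those regions into one large storage pool. The nice thing is that it survives losing one HDD, and depending on the situation, replacing just one HDD can increase the storage capacity. Replacing at least two HDDs with larger ones is guaranteed to increase capacity.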
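The capacity behaviour described in the quote can be worked out with a little arithmetic. The sketch below assumes one particular way of building the layout, which is an assumption and not necessarily the linked article's exact procedure: sort the disks by size, cut them into tiers at each distinct size, use RAID5 for tiers spanning 3 or more disks and RAID1 for tiers spanning exactly 2, leave single-disk tiers unused, and join all tiers into one VG with LVM. `layered_usable_capacity` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: usable capacity of a tiered md+LVM layout.
# Assumptions (not necessarily the linked article's exact recipe):
#   - disks are cut into tiers at every distinct disk size
#   - a tier spanning >= 3 disks is RAID5, exactly 2 disks is RAID1
#   - a tier present on only 1 disk is left unused (no redundancy)
#   - all tiers are joined into one VG with LVM
# Sizes are integers in one common unit (e.g. GB).
layered_usable_capacity() {
    local -a sizes
    mapfile -t sizes < <(printf '%s\n' "$@" | sort -n)
    local n=${#sizes[@]} prev=0 total=0 i slice disks
    for (( i = 0; i < n; i++ )); do
        slice=$(( sizes[i] - prev ))   # height of this tier
        disks=$(( n - i ))             # disks at least sizes[i] large
        if (( slice > 0 )); then
            if (( disks >= 3 )); then
                total=$(( total + (disks - 1) * slice ))   # RAID5
            elif (( disks == 2 )); then
                total=$(( total + slice ))                 # RAID1
            fi
        fi
        prev=${sizes[i]}
    done
    echo "$total"
}

layered_usable_capacity 1000 1000 1000
layered_usable_capacity 1000 1000 2000
layered_usable_capacity 1000 2000 2000
```

The three calls print 2000, 2000 and 3000: under these assumptions, swapping one 1000 GB disk for a 2000 GB one gains nothing, while swapping two adds capacity, which is consistent with the quote.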

Thin provisioning

- https://www.kernel.org/doc/Documentation/device-mapper/thin-provisioning.txt

These targets are very much still in the EXPERIMENTAL state. Please do not yet rely on them in production.

- https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Logical_Volume_Manager_Administration/LV.html#thinly_provisioned_volume_creation

- https://wiki.gentoo.org/wiki/LVM#Thin_provisioning (very well organized)

- (CentOS) LVM thinpool snapshots broken in 6.5?

For the people who run into this as well: This is apparently a feature and not a bug. Thin provisioning snapshots are no longer automatically activated and a "skip activation" flag is set during creation by default. One has to add the "-K" option to "lvchange -ay <snapshot-volume>" to have lvchange ignore this flag and activate the volume for real. "-k" can be used on lvcreate to not add this flag to the volume. See man lvchange/lvcreate for more details. /etc/lvm/lvm.conf also contains a "auto_set_activation_skip" option now that controls this.

Apparently this was changed in 6.5 but the changes were not mentioned in the release notes.

- Thin snapshots are now created inactive by default, and activating one requires lvchange's '-K' option (using '-k y -K' seems to be a good choice)
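Following the quoted behaviour, an inactive LV carrying the activation-skip flag (shown as `k` in the 10th `lv_attr` character, per lvs(8)) needs `-K` to be activated. A hypothetical dry-run helper that only prints the `lvchange` commands it would use, reading `lv_path lv_attr` pairs such as those produced by `lvs --noheadings -o lv_path,lv_attr`; the LV names in the test data are placeholders.

```bash
#!/usr/bin/env bash
# Hypothetical dry-run helper: reads "lv_path lv_attr" lines on stdin and
# prints (does not run) an lvchange command for each inactive LV.
# The 5th lv_attr character is the state ('a' = active); the 10th is 'k'
# when the activation-skip flag is set, in which case -K is added.
plan_activation() {
    local path attr
    while read -r path attr; do
        [[ -n $path && -n $attr ]] || continue
        [[ ${attr:4:1} == a ]] && continue        # already active
        if [[ ${attr:9:1} == k ]]; then
            echo "lvchange -ay -K $path"          # ignore the skip flag
        else
            echo "lvchange -ay $path"
        fi
    done
}

# Usage on a live system (review the output before running it):
#   lvs --noheadings -o lv_path,lv_attr | plan_activation
```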

metadata

- http://man7.org/linux/man-pages/man7/lvmthin.7.html also covers, among other things, how to manipulate the metadata directly

- (linux-lvm) how to recover after thin pool metadata did fill up? Older versions of lvm2 apparently cannot resize the metadata

- http://www.redhat.com/archives/linux-lvm/2012-October/msg00033.html

With 3.7 kernel and the next release of lvm2 (2.02.99) it's expected full support for live size extension of metadata device.


- dm-thin: issues about resize the pool metadata size

- A case where enlarging the metadata by a large amount fails (3.12.0-rc7, lvm2 2.02.103)

- dm-thin-internal-ja: dm-thin実装調査 (a study of the dm-thin implementation)
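For sizing the metadata LV up front, the kernel's thin-provisioning.txt (linked at the top of the thin provisioning section) gives a rule of thumb: 48 × data device size / data block size bytes, rounded up to 2 MB if smaller, with roughly 16 GB as the largest size it will use. A bash sketch of that arithmetic; `thin_meta_bytes` is a hypothetical name, 2 MB is taken as 2 MiB here, and `lvcreate` may pick a different default when it sizes the metadata LV itself.

```bash
#!/usr/bin/env bash
# Rule of thumb from the kernel's device-mapper thin-provisioning.txt:
#   metadata bytes = 48 * data_dev_size / data_block_size,
#   rounded up to 2 MB if smaller (2 MB taken as 2 MiB here).
# The kernel uses at most about 16 GB of a metadata device; that cap is
# not applied here. Both arguments must use the same unit (bytes).
thin_meta_bytes() {
    local data_bytes=$1 block_bytes=$2
    local min=$(( 2 * 1024 * 1024 ))
    local est=$(( 48 * data_bytes / block_bytes ))
    if (( est < min )); then
        est=$min
    fi
    echo "$est"
}

thin_meta_bytes $(( 2 * 1024**3 )) $(( 64 * 1024 ))   # 2 GiB pool, 64 KiB chunks
thin_meta_bytes $(( 1024**4 )) $(( 64 * 1024 ))       # 1 TiB pool, 64 KiB chunks
```

The first call hits the 2 MiB minimum (the raw estimate is 1.5 MiB); the second gives 805306368 bytes, i.e. 768 MiB.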

/etc/lvm/lvm.conf

error_when_full

- 0 (default)

- When the thin pool fills up, writes are queued and the thin pool enters out-of-data-space mode.

      May 10 12:35:10 raid-test lvm[303]: Thin vg-pool0-tpool is now 96% full.
      May 10 12:40:36 raid-test kernel: device-mapper: thin: 252:2: reached low water mark for data device: sending event.
      May 10 12:40:36 raid-test kernel: device-mapper: thin: 252:2: switching pool to out-of-data-space mode
      May 10 12:40:36 raid-test lvm[303]: Thin vg-pool0-tpool is now 100% full.

- `# lvs -a -o+lv_when_full`

        LV    VG Attr       LSize Pool  Origin Data%  Meta%  Move Log Cpy%Sync Convert WhenFull
        lv1   vg Vwi-aotz-- 3.00g pool0        66.67
        pool0 vg twi-aotzD- 2.00g              100.00 25.98                            queue

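- By default, the pool waits 60 seconds for the thin pool to be extended; if it is not, errors are returned to the filesystem and the thin pool switches to read-only mode. (The 60 seconds is the default of the dm-thin-pool module parameter no_space_timeout.)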

      May 10 12:41:36 raid-test kernel: device-mapper: thin: 252:2: switching pool to read-only mode
      May 10 12:41:36 raid-test kernel: EXT4-fs warning (device dm-8): ext4_end_bio:317: I/O error -5 writing to inode 17 (offset 25165824 size 8388608 starting block 591856)
      May 10 12:41:36 raid-test kernel: buffer_io_error: 38109 callbacks suppressed
      May 10 12:41:36 raid-test kernel: Buffer I/O error on device dm-8, logical block 591856
      May 10 12:41:36 raid-test kernel: EXT4-fs warning (device dm-8): ext4_end_bio:317: I/O error -5 writing to inode 17 (offset 25165824 size 8388608 starting block 591857)
      May 10 12:41:36 raid-test kernel: Buffer I/O error on device dm-8, logical block 591857
      (snip)
      May 10 12:41:36 raid-test kernel: device-mapper: thin: 252:2: metadata operation 'dm_pool_commit_metadata' failed: error = -1
      May 10 12:41:36 raid-test kernel: device-mapper: thin: 252:2: aborting current metadata transaction
      May 10 12:41:36 raid-test kernel: device-mapper: thin: 252:2: switching pool to read-only mode

- `# lvs -a -o+lv_when_full`

        LV    VG Attr       LSize Pool  Origin Data%  Meta%  Move Log Cpy%Sync Convert WhenFull
        lv1   vg Vwi-aotz-- 3.00g pool0        66.67
        pool0 vg twi-aotzM- 2.00g              100.00 25.98                            queue

- To recover from read-only mode, you must deactivate all thin LVs, then deactivate the thin pool LV, then run `lvconvert --repair` on the thin pool LV.

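The recovery order for a read-only pool (deactivate every thin LV, then the pool, then `lvconvert --repair`) can be written down as a dry-run plan. `repair_plan` is a hypothetical helper that only prints the commands; `lvchange -an` and `lvconvert --repair` are the standard lvm2 commands for those steps, while the VG/LV names in the example are the ones from the `lvs` output on this page.

```bash
#!/usr/bin/env bash
# Hypothetical dry-run helper: prints, in order, the commands for recovering
# a thin pool that has switched to read-only mode.
#   $1     VG name
#   $2     thin pool LV name
#   $3...  thin LV names that use the pool
repair_plan() {
    local vg=$1 pool=$2
    shift 2
    local lv
    for lv in "$@"; do
        echo "lvchange -an $vg/$lv"      # 1. deactivate every thin LV
    done
    echo "lvchange -an $vg/$pool"        # 2. deactivate the pool itself
    echo "lvconvert --repair $vg/$pool"  # 3. repair the pool metadata
}

repair_plan vg pool0 lv1
```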

- 1

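- When the thin pool fills up, writes fail immediately (the log shows I/O error -28, ENOSPC, instead of the -5, EIO, seen after the timeout with value 0).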

      May 10 12:53:17 raid-test kernel: device-mapper: thin: 252:2: reached low water mark for data device: sending event.
      May 10 12:53:17 raid-test kernel: device-mapper: thin: 252:2: switching pool to out-of-data-space mode
      May 10 12:53:17 raid-test kernel: EXT4-fs warning (device dm-8): ext4_end_bio:317: I/O error -28 writing to inode 17 (offset 33554432 size 8388608 starting block 591872)
      May 10 12:53:17 raid-test kernel: buffer_io_error: 39292 callbacks suppressed
      May 10 12:53:17 raid-test kernel: Buffer I/O error on device dm-8, logical block 591872
      May 10 12:53:17 raid-test kernel: EXT4-fs warning (device dm-8): ext4_end_bio:317: I/O error -28 writing to inode 17 (offset 33554432 size 8388608 starting block 591873)
      (snip)
      May 10 12:53:17 raid-test lvm[303]: Thin vg-pool0-tpool is now 100% full.
      May 10 12:53:27 raid-test kernel: EXT4-fs warning: 135366 callbacks suppressed
      May 10 12:53:27 raid-test kernel: EXT4-fs warning (device dm-8): ext4_end_bio:317: I/O error -28 writing to inode 17 (offset 588054528 size 4857856 starting block 727248)
      May 10 12:53:27 raid-test kernel: buffer_io_error: 135366 callbacks suppressed
      (snip)

- `# lvs -a -o+lv_when_full`

        lv1   vg Vwi-aotz-- 3.00g pool0        66.67
        pool0 vg twi-aotzD- 2.00g              100.00 26.86                            error

- Freeing allocated blocks, e.g. with fstrim, makes the pool recover immediately

      May 10 12:55:29 raid-test kernel: device-mapper: thin: 252:2: switching pool to write mode
      May 10 12:55:29 raid-test kernel: device-mapper: thin: 252:2: switching pool to write mode

- `# lvs -a -o+lv_when_full`

        lv1   vg Vwi-aotz-- 3.00g pool0        65.62
        pool0 vg twi-aotz-- 2.00g              98.44  26.46                            error


- Can be changed online, taking effect immediately

- `# lvchange --errorwhenfull y vg/pool0`

        Logical volume "pool0" changed.
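Since a full pool either queues or fails writes, it helps to watch `Data%` before the pool fills up (compare the "is now 96% full" message in the logs above). A hypothetical bash filter that reads `lv_name data_percent` pairs, e.g. from `lvs --noheadings -o lv_name,data_percent`, and prints the LVs at or above a threshold. Percentages are compared as integers after dropping the fractional part, which is a simplification.

```bash
#!/usr/bin/env bash
# Hypothetical filter: reads "lv_name data_percent" lines on stdin and
# prints the names whose usage is >= $1 percent. The fractional part is
# truncated, so 79.99 counts as 79. Lines without a percentage
# (LVs that report no Data%) are skipped.
over_threshold() {
    local limit=$1 name pct
    while read -r name pct; do
        [[ -n $name && -n $pct ]] || continue
        if (( ${pct%%.*} >= limit )); then
            echo "$name"
        fi
    done
}

# Usage on a live system:
#   lvs --noheadings -o lv_name,data_percent vg | over_threshold 80
```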


Attachments (1)

- deactivate.png (5.1 KB) - added by mitty 14 years ago.
